Sweady Team · Tutorials · 9 min read

How to Organize Effective Remote Code Reviews

Master the art of remote code reviews with proven strategies for distributed teams. Learn how to overcome time zone challenges, maintain code quality, and foster effective asynchronous communication.

In today’s increasingly distributed world, organizing effective code reviews across time zones and locations has become a crucial skill for development teams. Remote work has shifted from a temporary solution to a permanent fixture in the tech industry, which makes remote code review a core competency rather than an optional one.

But let’s face it: reviewing code when your team is scattered across continents presents challenges that co-located teams don’t face. The good news? With the right approach, remote code reviews can match, and in some respects outperform, their in-person counterparts in efficiency, thoroughness, and team satisfaction.

The Real Challenges of Remote Code Reviews

Before diving into solutions, let’s acknowledge the specific obstacles that make remote code reviews difficult:

1. Time Zone Puzzles

When your team spans multiple time zones, the traditional “let me just tap you on the shoulder” approach becomes impossible:

- Delayed Feedback Loops: A developer in San Francisco submits code at 5pm, but the reviewer in Tokyo won’t see it until the developer is fast asleep.

- Limited Overlap Windows: Teams with developers in Europe, the Americas, and Asia may share only one or two overlapping working hours, and sometimes none at all.

- Review Bottlenecks: When work can’t be handed off efficiently between time zones, PRs pile up in review queues and hold up the rest of development.

Example scenario: A developer in Berlin submits a critical fix at 4pm her time (10am in New York). The lead reviewer in New York is early in his day but has 10 other PRs to review. By the time he gets to the Berlin developer’s code, she’s offline for the day, and any questions must wait until tomorrow, effectively adding a 24-hour delay to the process.
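To see how small (or nonexistent) a shared window can be, it helps to compute it. The following is a minimal sketch, not a scheduling tool: it assumes each member works 9:00 to 17:00 local time, uses fixed UTC offsets (so daylight saving time is ignored), and the roster is hypothetical. It converts each member’s working hours into UTC hours and intersects them.

```javascript
// Hypothetical roster. Offsets are fixed hours from UTC, so daylight saving
// time is ignored; start/end are local working hours (end is exclusive).
const team = [
  { name: "Berlin", utcOffset: 1, start: 9, end: 17 },
  { name: "New York", utcOffset: -5, start: 9, end: 17 },
];

// Convert one member's local working hours into a set of UTC hours (0-23).
function workingHoursUtc({ utcOffset, start, end }) {
  const hours = new Set();
  for (let local = start; local < end; local++) {
    // Shift to UTC, then wrap negative or >23 values back into 0-23.
    hours.add((((local - utcOffset) % 24) + 24) % 24);
  }
  return hours;
}

// Intersect everyone's UTC working hours to find the shared window.
function sharedHoursUtc(members) {
  if (members.length === 0) return [];
  const [first, ...rest] = members.map(workingHoursUtc);
  return [...first]
    .filter((hour) => rest.every((hours) => hours.has(hour)))
    .sort((a, b) => a - b);
}

console.log(sharedHoursUtc(team)); // [14, 15], i.e. 14:00-16:00 UTC
const withTokyo = [...team, { name: "Tokyo", utcOffset: 9, start: 9, end: 17 }];
console.log(sharedHoursUtc(withTokyo)); // [], no common hour at all
```

With Berlin and New York the overlap is two hours (15:00 to 17:00 in Berlin, 9:00 to 11:00 in New York); adding Tokyo removes it entirely, which is why the asynchronous practices in this guide matter.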

2. Communication Hurdles

Remote work strips away many communication channels we take for granted:

- Missing Context: In-office teams absorb context through osmosis—overhearing discussions, noticing team focus areas, sensing priorities. Remote teams miss this ambient awareness.

- Limited Expression: Text-based feedback lacks tone, facial expressions, and the ability to quickly sketch alternatives.

- Cultural and Language Differences: Distributed teams often cross cultural boundaries, introducing different communication styles and potential misunderstandings.

3. Technical Fragmentation

Remote teams frequently deal with:

- Environment Inconsistencies: “It works on my machine” takes on new meaning when team members use different operating systems, IDE configurations, and local setups.

- Varying Connection Speeds: A screen sharing session that works perfectly on fiber optic internet might be unusable for team members with less reliable connections.

- Tool Proliferation: Without careful coordination, teams can end up with fragmented toolchains, making it harder to maintain a smooth review process.

Building Your Remote Code Review Playbook

Let’s transform these challenges into opportunities with specific, actionable strategies:

1. Create a Code Review Constitution

Rather than vague review guidelines, develop a detailed “constitution” that eliminates ambiguity:

```markdown
# Team Review Constitution

## Review SLAs

- Initial response: Within 24 hours (business days)
- High-priority fixes: Within 4 working hours
- Feature PRs: Complete review within 48 hours

## Required Elements

- All PRs must include updated tests
- Screenshots for UI changes
- Performance benchmarks for data-intensive changes
- Security review checklist for auth-related changes

## Review Scope Guidance

- Leave style feedback only if it contradicts our style guide
- Focus on logic, security, and performance issues
- Grammar/typo fixes should be made directly, not commented on
```

This level of detail prevents misalignment and reduces back-and-forth questions that drain time across time zones.
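Written SLAs are easiest to honor when something checks them automatically. Here is a minimal sketch, assuming a hypothetical PR record with `openedAt`, `firstResponseAt`, and `highPriority` fields: it counts elapsed hours that fall on weekdays (in UTC) and compares them with the first-response targets from the constitution. It ignores holidays and individual working hours, so the “4 working hours” target is only approximated.

```javascript
const MS_PER_HOUR = 60 * 60 * 1000;

// First-response targets from the constitution, in hours.
const FIRST_RESPONSE_SLA = { normal: 24, highPriority: 4 };

// Count whole hours between two instants that start on a weekday (UTC).
// Simplification: weekends are skipped, but nights and holidays still count.
function weekdayHoursBetween(start, end) {
  let hours = 0;
  for (let t = start.getTime(); t + MS_PER_HOUR <= end.getTime(); t += MS_PER_HOUR) {
    const day = new Date(t).getUTCDay(); // 0 = Sunday, 6 = Saturday
    if (day !== 0 && day !== 6) hours++;
  }
  return hours;
}

// Hypothetical PR shape: { openedAt: Date, firstResponseAt: Date | null, highPriority: boolean }
function isFirstResponseOverdue(pr, now) {
  const limit = pr.highPriority ? FIRST_RESPONSE_SLA.highPriority : FIRST_RESPONSE_SLA.normal;
  // If nobody has responded yet, measure up to "now".
  const until = pr.firstResponseAt ?? now;
  return weekdayHoursBetween(pr.openedAt, until) > limit;
}

// Opened Friday 16:00 UTC, checked Monday 10:00 UTC.
const openedAt = new Date("2024-03-01T16:00:00Z");
const checkedAt = new Date("2024-03-04T10:00:00Z");
console.log(weekdayHoursBetween(openedAt, checkedAt)); // 18
console.log(isFirstResponseOverdue({ openedAt, firstResponseAt: null, highPriority: false }, checkedAt)); // false
console.log(isFirstResponseOverdue({ openedAt, firstResponseAt: null, highPriority: true }, checkedAt)); // true
```

Counting weekday hours rather than wall-clock hours is the design choice that matters here: the same PR has been open for 66 wall-clock hours, which would wrongly flag the normal 24-hour SLA over a weekend.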

2. Master Asynchronous Communication

The key to successful remote reviews is embracing asynchronicity as a feature, not a bug:

Rich Pull Request Descriptions

Transform basic PR descriptions into comprehensive briefing documents:

Before:

```
Added user profile feature. Please review.
```

After:

```markdown
## User Profile Feature

This PR implements the user profile page as described in ticket PROJ-342.

### Implementation Notes

- Created new UserProfile component with responsive design
- Added profile data fetch with caching layer
- Implemented avatar upload with client-side image compression

### Testing Notes

- Manual testing completed across mobile and desktop
- Added 12 new unit tests covering core functionality
- Load tested with simulated database of 10,000 users

### Areas Needing Special Attention

- The image compression algorithm (lines 45-87) is complex
- Security review needed for the file upload implementation
- Performance review for the profile data caching approach

### Screenshots

[Before/After comparison images]
```

This level of context cuts down on follow-up questions and helps reviewers focus their attention where it matters most.
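A template only helps if people fill it in. As a hedged sketch, a small CI step could flag descriptions that are missing the sections used in the example above. The section list and the `missingSections` helper are illustrative assumptions, and fetching the PR body from your CI environment or Git host is left out.

```javascript
// Section headings expected in every PR description (taken from the example
// above; adjust to match your own template).
const REQUIRED_SECTIONS = [
  "Implementation Notes",
  "Testing Notes",
  "Areas Needing Special Attention",
];

// Return the required sections that don't appear as Markdown headings.
function missingSections(prBody) {
  const headings = new Set(
    prBody
      .split("\n")
      .map((line) => line.match(/^#{1,6}\s+(.*\S)\s*$/)) // "### Title" -> "Title"
      .filter((match) => match !== null)
      .map((match) => match[1].toLowerCase())
  );
  return REQUIRED_SECTIONS.filter((section) => !headings.has(section.toLowerCase()));
}

// The "Before" description is missing every section.
console.log(missingSections("Added user profile feature. Please review."));

const detailed = [
  "## User Profile Feature",
  "### Implementation Notes",
  "### Testing Notes",
  "### Areas Needing Special Attention",
].join("\n");
console.log(missingSections(detailed)); // []
```

Matching is case-insensitive and tolerates trailing whitespace, so small formatting differences don’t produce false alarms.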

Video Walkthroughs

For complex changes, include a 3-5 minute screen recording using tools like Loom or CloudApp:

- Show the feature in action

- Explain architectural decisions

- Highlight areas of uncertainty

- Demonstrate test coverage

A brief video can save hours of textual back-and-forth and provide context that code alone cannot convey.

3. Time Zone Intelligence

Don’t just work around time zones—leverage them strategically:

Follow-the-Sun Reviews

Implement a structured handoff process:

- Developer in Tokyo completes work and tags it for review

- Before signing off, they add detailed context in the PR description

- Reviewer in Berlin picks it up at the start of their day

- After review, they tag the original developer with specific questions

- The cycle continues with each time zone

With enough time zones in the rotation, this approach turns time differences from a liability into a near-continuous, around-the-clock development cycle.
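Handoffs work best when the next reviewer is actually online. As one possible building block, this sketch uses the standard `Intl.DateTimeFormat` API to work out each reviewer’s local hour and returns those currently inside an assumed 9:00 to 17:00 working day. The roster and the fixed working hours are hypothetical, and weekends are not handled.

```javascript
// Hypothetical reviewer roster; time zones are IANA names.
const reviewers = [
  { name: "Aiko", timeZone: "Asia/Tokyo" },
  { name: "Lena", timeZone: "Europe/Berlin" },
  { name: "Sam", timeZone: "America/New_York" },
];

// Local hour (0-23) of an instant in a given time zone, via the built-in Intl API.
// The engine's time zone database takes care of daylight saving time.
function localHour(date, timeZone) {
  const parts = new Intl.DateTimeFormat("en-US", {
    timeZone,
    hour: "numeric",
    hourCycle: "h23",
  }).formatToParts(date);
  // "% 24" is defensive: some engines have rendered midnight as "24".
  return Number(parts.find((part) => part.type === "hour").value) % 24;
}

// Reviewers whose local time is inside an assumed working day [start, end).
function reviewersOnShift(date, roster, start = 9, end = 17) {
  return roster.filter(({ timeZone }) => {
    const hour = localHour(date, timeZone);
    return hour >= start && hour < end;
  });
}

// 09:00 UTC on Tuesday 5 March 2024: 10:00 in Berlin, 18:00 in Tokyo, 04:00 in New York.
const onShift = reviewersOnShift(new Date("2024-03-05T09:00:00Z"), reviewers);
console.log(onShift.map((r) => r.name)); // ["Lena"]
```

A handoff bot could call this when a PR is tagged for review and notify only the reviewers it returns, rather than whoever happens to be assigned by default.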

Time Zone Visibility

Use tools that make time zones explicit:

- Add a team world clock to your shared dashboards

- Include local time indicators in chat applications

- Maintain a shared team calendar showing working hours

- Use tools like Timezone.io to visualize team availability

Optimized Synchronous Windows

For the few hours when teams do overlap:

- Schedule “review power hours” where team members prioritize review work

- Hold brief video standups focused specifically on blocked PRs

- Use pair reviewing for complex changes that benefit from discussion

4. Tool Ecosystem That Eliminates Friction

The right tools can compensate for many remote challenges:

Must-Have Integrations

Build a cohesive review ecosystem:

- GitHub/GitLab + Slack: Automatic notifications for new PRs, comments, and CI status

- CI/CD + Review Process: Automated tests must pass before human review begins

- Code Quality Tools: Integrate SonarQube, ESLint, or similar tools to handle mechanical aspects of review

- Automated Documentation: Tools like Swimm that keep code and documentation in sync
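The “tests pass before human review” rule is easy to encode. This is a minimal sketch, assuming check results shaped like the `status` and `conclusion` fields GitHub reports for check runs (`status` such as `queued`, `in_progress`, or `completed`; `conclusion` such as `success`, `failure`, `neutral`, or `skipped`). Fetching them from the API is omitted, and treating `neutral` and `skipped` as passing is a policy choice you may want to change.

```javascript
// Conclusions treated as "not blocking" (a team policy choice).
const PASSING_CONCLUSIONS = new Set(["success", "neutral", "skipped"]);

// checkRuns: array of { name, status, conclusion }, modeled on GitHub check runs.
// Returns true only when every run has finished with a passing conclusion.
function readyForHumanReview(checkRuns) {
  if (checkRuns.length === 0) return false; // no CI signal yet, so keep waiting
  return checkRuns.every(
    (run) => run.status === "completed" && PASSING_CONCLUSIONS.has(run.conclusion)
  );
}

const runs = [
  { name: "unit-tests", status: "completed", conclusion: "success" },
  { name: "lint", status: "completed", conclusion: "success" },
  { name: "e2e", status: "in_progress", conclusion: null },
];
console.log(readyForHumanReview(runs)); // false: e2e is still running
```

A bot could run this whenever CI status changes and only then request reviewers or post the chat notification, so nobody is pinged about a PR that is still failing.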

Visual Collaboration Tools

For complex discussions that text can’t adequately capture:

- Excalidraw or Miro for impromptu diagrams

- Figma for UI discussions

- CodeSandbox for live code experimentation

- VS Code Live Share for pair reviewing

5. Structure PRs for Review Success

The way you structure code changes dramatically impacts review quality:

Size Matters

Studies of code review have repeatedly found that defect detection falls off as the size of the change under review grows:

- Keep PRs under 400 lines of code when possible

- For larger features, use feature flags to enable incremental reviews

- Consider stacked PRs for dependent changes
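A size guideline is easier to follow when CI reports the number for you. One minimal sketch, assuming “lines of code” means added plus deleted lines: parse the output of `git diff --numstat` (one line per file with added count, deleted count, and path separated by tabs, and `-` in place of counts for binary files) and compare the total against the 400-line guideline.

```javascript
const MAX_CHANGED_LINES = 400; // guideline from this article

// Sum added + deleted lines from `git diff --numstat` output.
// Binary files are reported as "-\t-\tpath" and are skipped.
function changedLines(numstat) {
  let total = 0;
  for (const line of numstat.split("\n")) {
    if (line.trim() === "") continue;
    const [added, deleted] = line.split("\t");
    if (added === "-" || deleted === "-") continue;
    total += Number(added) + Number(deleted);
  }
  return total;
}

// Sample output, e.g. from `git diff --numstat main...HEAD` (file names are hypothetical).
const sample = "23\t0\tsrc/models/user.js\n87\t4\tsrc/api/users.js\n-\t-\tassets/avatar.png\n";
const total = changedLines(sample);
console.log(total, total <= MAX_CHANGED_LINES ? "ok" : "consider splitting this PR");
// 114 ok
```

Posting the count as a PR comment, rather than failing the build, keeps the 400-line figure a guideline instead of a hard gate.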

Logical Commits

Structure your commits to tell a story:

- Add user model schema (23 lines)

- Implement basic CRUD operations (87 lines)

- Add validation logic (45 lines)

- Create REST API endpoints (95 lines)

- Add authentication middleware (58 lines)

This approach lets reviewers understand your change incrementally, rather than as a monolithic block.

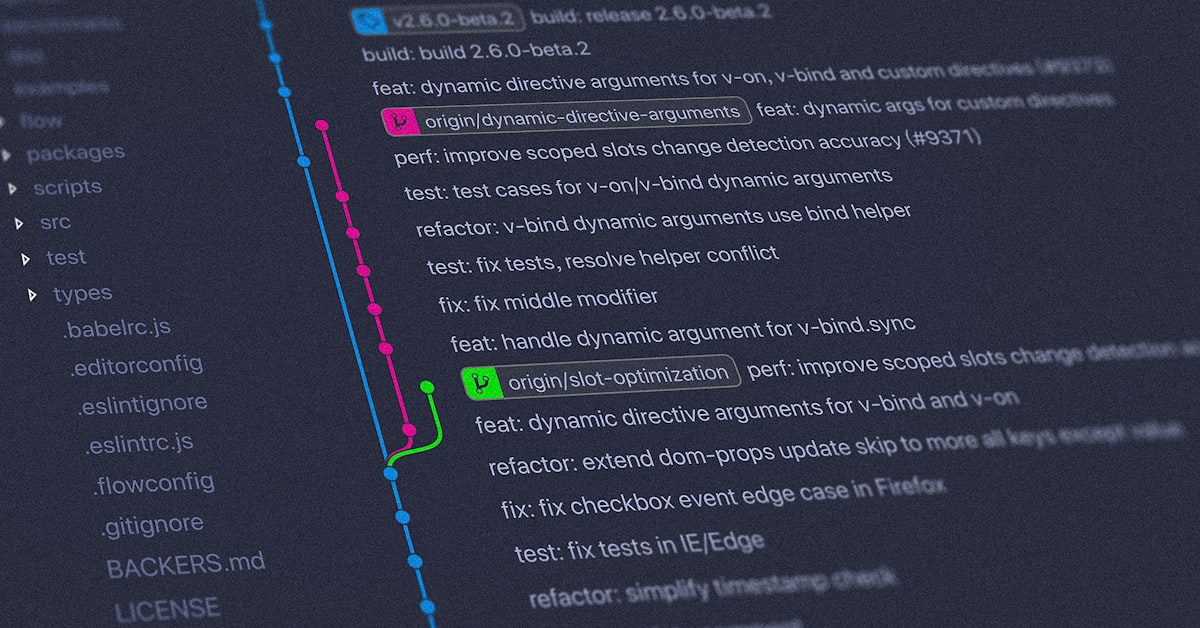

Smart Branching Strategies

Use branching strategies that support effective reviews:

- Short-lived feature branches to prevent drift

- Trunk-based development with feature flags for large changes

- Regular rebasing to maintain a clean history
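“Short-lived” is easier to enforce with a number attached. As an illustrative sketch, the snippet parses the output of `git for-each-ref --format='%(refname:short) %(committerdate:unix)' refs/heads` (branch name plus last-commit Unix timestamp) and lists branches whose latest commit is older than a threshold. The seven-day default is a hypothetical value, not a rule from this article.

```javascript
const DAY_SECONDS = 24 * 60 * 60;

// Parse "<branch> <unix-timestamp>" lines and return branches whose last commit
// is older than maxAgeDays (7 is a hypothetical default). Git ref names cannot
// contain spaces, so splitting on the last space is safe.
function staleBranches(forEachRefOutput, nowSeconds, maxAgeDays = 7) {
  return forEachRefOutput
    .split("\n")
    .filter((line) => line.trim() !== "")
    .map((line) => {
      const i = line.lastIndexOf(" ");
      return { branch: line.slice(0, i), committedAt: Number(line.slice(i + 1)) };
    })
    .filter(({ committedAt }) => nowSeconds - committedAt > maxAgeDays * DAY_SECONDS)
    .map(({ branch }) => branch);
}

// Fixed "current time" so the example is reproducible; in practice use
// Math.floor(Date.now() / 1000).
const exampleNow = 1700000000;
const refs = [
  `feature/avatar-upload ${exampleNow - 2 * DAY_SECONDS}`,
  `feature/profile-cache ${exampleNow - 12 * DAY_SECONDS}`,
].join("\n");
console.log(staleBranches(refs, exampleNow)); // ["feature/profile-cache"]
```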

Communication Techniques That Bridge the Distance

The Art of Textual Feedback

Remote reviews rely heavily on written communication, so mastering this medium is essential:

Be Specific and Actionable

Ineffective feedback:

```
This implementation seems inefficient.
```

Effective feedback:

```
The user search function currently queries the database on each keystroke. Consider:

1. Adding a 300ms debounce to reduce query volume
2. Implementing client-side caching for recent results
3. Using the existing UserSearchIndex for better performance
```

Use Markdown Effectively

Leverage formatting to make your comments more scannable and precise:

````markdown
### Performance Concern

The current implementation has O(n²) complexity because:

- The outer loop iterates through all users
- For each user, we perform a full array scan

**Suggested alternative:**

```javascript
// Index users by id once (O(n)); each later lookup is then O(1) on average,
// replacing the full array scan inside the loop
const userMap = new Map();
users.forEach((user) => userMap.set(user.id, user));
```
````

Ask Questions Instead of Making Statements

Phrase feedback as questions when appropriate:

Statement (can seem confrontational):

```
This code doesn't handle the empty array case.
```

Question (invites collaboration):

```
How does this code behave when the input array is empty? Should we add a check there?
```

Visual Communication for Complex Concepts

When text isn’t enough, use visual tools:

- Architecture diagrams for system-level discussions

- Sequence diagrams for complex interactions

- Annotated screenshots for UI feedback

- Performance graphs for optimization discussions

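Mermaid diagrams can be written directly in Markdown PR comments on platforms that render them, such as GitHub and GitLab, while Excalidraw sketches can be exported as images and attached to comments or documentation.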

Measuring and Improving Your Process

What gets measured gets improved. Track these metrics to gauge your remote review effectiveness:

Key Metrics to Watch

- Time to First Review: How long PRs wait before receiving initial feedback

- Review Cycles: Average number of back-and-forth exchanges before approval

- Review Coverage: Percentage of changed lines that received comments

- Defect Escape Rate: How many bugs make it past review

- Developer Satisfaction: Regular surveys about the review process
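Several of these metrics can be derived from data your Git host already records. As a hedged sketch, assuming you have exported PR records with hypothetical `openedAt`, `firstReviewAt`, and `reviewRounds` fields, the median time to first review and the average number of review cycles can be computed as follows. The median is used because a few long-stalled PRs would otherwise skew an average.

```javascript
const HOUR_MS = 60 * 60 * 1000;

// Median of a numeric array; null when the array is empty.
function median(values) {
  if (values.length === 0) return null;
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Hypothetical PR export: { openedAt: Date, firstReviewAt: Date | null, reviewRounds: number }
function reviewMetrics(prs) {
  const reviewed = prs.filter((pr) => pr.firstReviewAt !== null);
  // Subtracting Dates yields milliseconds.
  const waitHours = reviewed.map((pr) => (pr.firstReviewAt - pr.openedAt) / HOUR_MS);
  const rounds = reviewed.map((pr) => pr.reviewRounds);
  return {
    medianHoursToFirstReview: median(waitHours),
    averageReviewCycles:
      rounds.length > 0 ? rounds.reduce((sum, n) => sum + n, 0) / rounds.length : null,
    awaitingFirstReview: prs.length - reviewed.length,
  };
}

const prs = [
  { openedAt: new Date("2024-03-04T09:00:00Z"), firstReviewAt: new Date("2024-03-04T13:00:00Z"), reviewRounds: 2 },
  { openedAt: new Date("2024-03-04T10:00:00Z"), firstReviewAt: new Date("2024-03-05T10:00:00Z"), reviewRounds: 1 },
  { openedAt: new Date("2024-03-05T08:00:00Z"), firstReviewAt: new Date("2024-03-05T14:00:00Z"), reviewRounds: 3 },
  { openedAt: new Date("2024-03-05T16:00:00Z"), firstReviewAt: null, reviewRounds: 0 },
];
console.log(reviewMetrics(prs));
// { medianHoursToFirstReview: 6, averageReviewCycles: 2, awaitingFirstReview: 1 }
```

Computing these numbers before and after a process change gives you the baseline a feedback loop needs.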

Creating a Feedback Loop

- Measure your current process (establish a baseline)

- Implement specific improvements

- Measure again after 2-4 weeks

- Share results with the team

- Repeat

Automation: Your 24/7 Review Assistant

In remote teams, automation isn’t just convenient—it’s essential for maintaining velocity:

Beyond Basic CI

- Automated Code Formatting: Eliminate style discussions with tools like Prettier or Black

- Static Analysis: Use language-specific analyzers (ESLint, RuboCop, etc.) configured to your team standards

- Security Scanning: Integrate tools like Snyk or GitHub Security Alerts

- Dependency Updates: Automate with Dependabot or similar tools

- Documentation Checks: Verify API documentation coverage

Custom Automations Worth Building

These team-specific automations can dramatically improve remote reviews:

- PR Templates: Create context-rich templates that prompt for necessary information

- Review Assignment Algorithms: Build smart assignment based on code ownership, workload, and time zones

- PR Summary Bots: Automatically generate summaries of large PRs for easier review

- Review Dashboard: Build custom views showing review status across teams
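To make the smart-assignment idea concrete, here is a deliberately simple sketch. It assumes hypothetical reviewer records with owned path prefixes, a count of open reviews, and an `onShift` flag computed elsewhere (for example, from each reviewer’s time zone). It keeps only owners of the touched files, prefers reviewers who are currently working, and breaks ties by lightest workload. Ownership files such as GitHub’s CODEOWNERS express ownership more precisely; this is only an illustration of the ranking logic.

```javascript
// Hypothetical reviewer records.
const roster = [
  { name: "Aiko", owns: ["src/api/"], openReviews: 3, onShift: false },
  { name: "Lena", owns: ["src/api/", "src/models/"], openReviews: 5, onShift: true },
  { name: "Sam", owns: ["src/ui/"], openReviews: 1, onShift: true },
];

// Pick a reviewer: must own at least one changed path and not be the author;
// prefer reviewers on shift, then the lightest current workload.
function pickReviewer(changedPaths, author, reviewers) {
  const candidates = reviewers.filter(
    (r) =>
      r.name !== author &&
      changedPaths.some((path) => r.owns.some((prefix) => path.startsWith(prefix)))
  );
  candidates.sort(
    (a, b) => Number(b.onShift) - Number(a.onShift) || a.openReviews - b.openReviews
  );
  return candidates[0]?.name ?? null; // null when nobody owns the changed paths
}

console.log(pickReviewer(["src/api/users.js"], "Sam", roster)); // "Lena"
```

Lena wins here despite a heavier queue because Aiko is off shift; swapping the sort keys would favor workload over availability instead.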

Conclusion: From Challenge to Competitive Advantage

Remote code reviews don’t have to be a necessary evil—they can become your team’s secret weapon. By thoughtfully addressing each challenge with specific strategies, you can create a review process that:

- Maintains consistently high code quality

- Leverages global talent across time zones

- Creates clear, searchable documentation through review discussions

- Builds a stronger team culture despite physical distance

The most successful remote teams don’t just replicate office-based processes online—they reimagine work to leverage the unique advantages of distributed collaboration.

Start by implementing one or two strategies from this guide, measure the results, and expand from there. With patience and iteration, your team can transform remote code reviews from a challenge into a competitive advantage.

Remember that at its core, effective code review isn’t about the tools or processes—it’s about creating an environment of trust, learning, and collaboration that spans geographical boundaries. When your team members feel supported and empowered, great reviews will follow, regardless of where they call home.

Sweady
